- Introduction

- ChatGPT: The Future of Law

- Advantages of ChatGPT

- Disadvantages of ChatGPT

- Risks associated with ChatGPT

- Impact of ChatGPT on the legal industry

- Is the deployment of ChatGPT an issue?

- Artificial intelligence versus intellectual property

- ChatGPT: Game changing add-on or disruption?

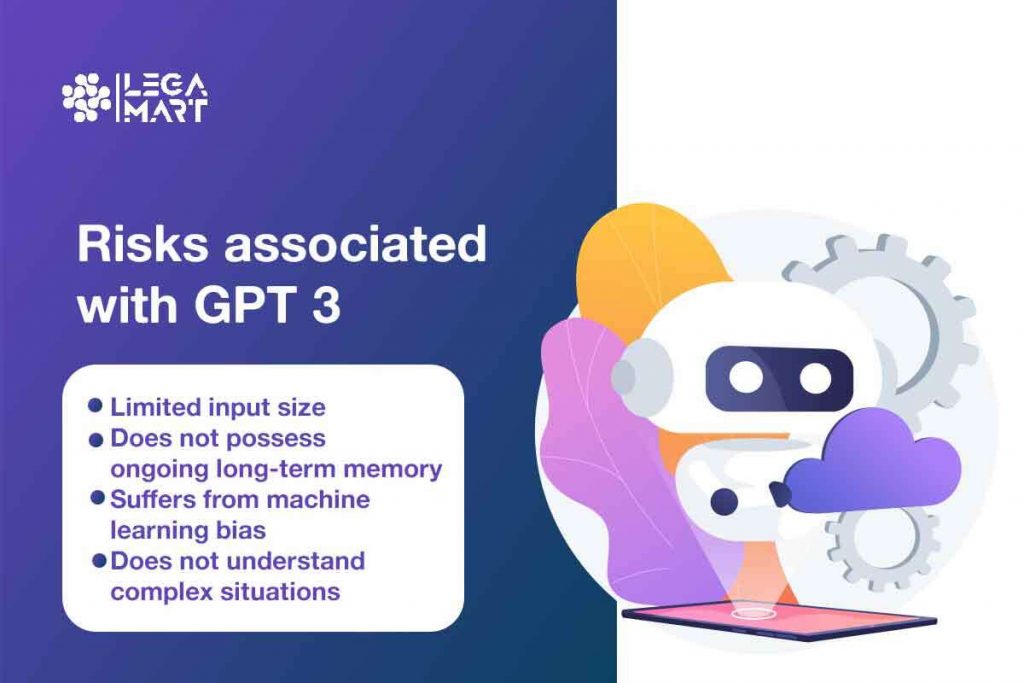

- Last but not least: Risks associated with GPT3

- Risks associated with GPT3

- Conclusion

Introduction

With new innovations arriving every year, the field of artificial intelligence has grown manifold and expanded its market like few others. The industry keeps astonishing us with new experiments and with upgrades built on the latest technology. ChatGPT is the result of one such innovation.

This chatbot can answer questions, write essays and poems, and generate computer programs. It draws on training data gathered from many domains of knowledge. As a chatbot built by OpenAI, it can respond to a wide range of queries and questions, but it cannot replace tasks that only a human can handle.

ChatGPT: The Future of Law

ChatGPT can perform impressive logical and analytical tasks, but it does not reason the way a human mind does. It runs on computer programs and training data; hence, it cannot perform tasks that require human analytical judgement, such as solving legal issues or finding loopholes in the law. Even though the technology is at a very early phase of its development, people have already started using it in many fields. While some people find it a reliable source for generating documents, others do not.

One of the legal risks that comes with ChatGPT is its potential to infringe intellectual property rights. Since the technology works on a large amount of textual data collected from different sources, there is a real threat that it might extract copyrighted works and build on them, leading to legal action against the user of ChatGPT. This risk extends to its use on social media platforms, where copyright infringement is a prevalent concern.

Additionally, it can generate defamatory content, as it reproduces data without understanding or comprehending it as a human would. Therefore, it cannot decide between right and wrong.

ChatGPT might also share one user’s personal data with other users, breaching both the individual’s fundamental right to privacy and the protection of their personal data.

This technology might also create fake news and misleading content through conversational text, increasing the number of legal actions arising from it and creating a blurry and unreliable image of the user on the internet.

Advantages of ChatGPT

ChatGPT has been developed and trained using Reinforcement Learning from Human Feedback (RLHF), the same method used for InstructGPT, although the data collection was set up slightly differently. It was trained on conversations in which human AI trainers played both parts: the user and the AI assistant. It is fine-tuned from a model in the GPT-3.5 series, which finished training in early 2022.

- ChatGPT is a trained model that interacts in a conversational way.

- The dialogue format in which it has been set up allows it to answer questions, admit its mistakes, and challenge absurd or incorrect statements.

- It is a sibling model to InstructGPT, which is trained to follow an instruction in a prompt and provide a detailed response.

Disadvantages of ChatGPT

With every technology bringing its pros and cons, ChatGPT is no different. It is one of the fastest-growing OpenAI technologies, but it has its own set of limitations.

- Since it’s a machine, it can only generate answers based on its programming and the data it was trained on. It is an artificial technology, not a human expert.

- ChatGPT may change its answer after even the slightest change in phrasing, or when the same prompt is submitted multiple times.

- It might use repetitive language, given that its knowledge is limited to the text it was trained on.

- When a user enters an ambiguous query, it usually guesses what the user intended rather than asking a clarifying question.

- Although it uses OpenAI’s Moderation API to warn about or block sensitive or unsafe content, it might still respond to some harmful instructions or produce biased opinions.
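The point above about answers changing between attempts follows from the way language models pick each next word at random, weighted by probability. The toy sketch below illustrates only that idea. It is not ChatGPT’s actual code: the three candidate words and their probabilities are invented for illustration, and `sample_completion` is a hypothetical helper name.

```python
import random

# Hypothetical next-word probabilities, invented for illustration only.
NEXT_WORD_PROBS = {
    "binding": 0.5,
    "enforceable": 0.3,
    "void": 0.2,
}


def sample_completion(seed: int) -> str:
    """Pick one continuation at random, weighted by its probability."""
    rng = random.Random(seed)  # a separate generator per attempt
    words = list(NEXT_WORD_PROBS)
    weights = list(NEXT_WORD_PROBS.values())
    return rng.choices(words, weights=weights, k=1)[0]


if __name__ == "__main__":
    # The same "prompt" is attempted five times; the ending may differ.
    for attempt in range(5):
        print(f"Attempt {attempt}: The contract is {sample_completion(attempt)}.")
```

Each attempt uses a different random seed, which stands in for a fresh request to the model. The same question can therefore end in different words, which is why a lawyer should not treat a single answer as settled.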

Risks associated with ChatGPT

Over the years, several concerns have been raised about underlying bias in AI models, ranging from derogatory language, racial discrimination, and violent depictions to gender stereotyping. ChatGPT is also accused of inheriting such biases.

ChatGPT was trained on an enormous dataset of 570 GB, or 300 billion words. The problems highlighted are that the information on which ChatGPT is trained is prone to regressive bias, the filters used to improve the dataset are not 100% accurate, and the research data is not diverse, since people largely control what goes into it. Ethics in AI is essential to weed out inherent bias from machine learning algorithms as human programmers create more AI-based systems.

Another concern is plagiarism. Image-generation tools have been found to plagiarise the work of others. In the context of ChatGPT, far fewer sophisticated text plagiarism detection tools are available to detect advanced forms of plagiarism.

The last limitation is potential inaccuracy. For example, users of ChatGPT have stated that the text produced contains wrong conclusions and analogies, that the output lacks substance, or that the generated code does not run or is inefficient.

Impact of ChatGPT on the legal industry

After the release of this AI-powered language generation model, there was much speculation in the legal industry as to whether OpenAI’s new offering, ChatGPT, would threaten legal sector jobs.

In short, probably not.

In our view, ChatGPT has enormous potential and will play a transformative role in the legal industry. It can benefit lawyers by swiftly completing routine and monotonous tasks with minimal scrutiny. The following are some of the ways in which the legal sector can be impacted:

- Automating legal research: Models like ChatGPT can be trained on data and legal texts. This will help lawyers find relevant information and generate summaries of legal documents, ultimately leading to better-informed decisions.

- Legal Chatbots: Lawyers can use chatbots to answer routine questions for clients and provide information. This could save lawyers valuable time and let them focus on more complex and vital tasks.

- Producing legal documents: Legal teams and lawyers can quickly generate contracts and legal documents after training GPT models on existing legal documents. AI-based systems can further be utilized in reviewing legal documents and discovery.

- Legal services: AI technology has the potential to provide legal services at a lower cost to a broad range of clients.

Is the deployment of ChatGPT an issue?

Deploying AI applications in legal enterprises is riddled with challenges related to security, cost and trust. The use of ChatGPT in particular raises further concerns because of its intricate structure.

For legal departments, employing ChatGPT necessitates hardware capable of managing the voluminous data that such AI models require to generate outcomes. It also raises security and ethical concerns and can prove overwhelming for legal enterprises.

Also, in situations where in-depth legal study and research are required, ChatGPT is not a favourable tool as it does not possess judgement or the understanding to interpret legal precedents and principles like a human lawyer.

Artificial intelligence versus intellectual property

ChatGPT, a recently launched chatbot and social media sensation, is built on OpenAI’s platform of large language models. Millions of users are using it for various purposes, such as writing briefs, creating contracts and much more.

However, with the increasing emergence of AI chatbots such as ChatGPT, it is important to address issues related to IP and its application to these next-generation technologies.

For copyright law, one of the critical issues at stake is who should be considered the “creator” of the content, and to what extent. As new technologies become more sophisticated, it is hard to distinguish between content produced by humans and AI-generated content. This raises a further question: whether AI chatbots can be entitled to IP protections similar to those of human authors.

To qualify for protection under copyright law, “original works of authorship” must be produced by a human creator and fixed in a tangible form, such as a digital file, a painting, a book or another physical form.

It is a grey area whether content produced by AI can be recognized as original, as fixed in a tangible form, and as eligible for copyright protection. If humans use an AI system as a tool to create work, they must be considered its owners and creators. Alternatively, if AI produces original content without human intervention, it should be considered the owner and creator of the work. Therefore, urgent reforms in the respective IP laws are the need of the hour so that any copyright protection given to AI chatbots is respected.

Additionally, another crucial risk is the inadvertent or intentional generation of content based on pre-existing work, which results in infringement of others’ IP. A recent instance illustrates this: Instagram was flooded with posts of cartoonish illustrations of people resembling different characters.

They were generated by AI programs in the “Lensa app”, which use an online database of images of art belonging to human artists without asking for their consent. This is the result of unethical training on, and use of, the intellectual property of others. The question at hand is: does AI art fall within the ambit of IP theft, given that copyrights are infringed without compensating or crediting artists for their work?

ChatGPT: Game changing add-on or disruption?

ChatGPT can potentially reduce labor costs by enhancing the quality of legal work and handling mundane and repetitive tasks, which may in turn lead to cost savings for clients and legal professionals. As AI tech is advancing rapidly, the possibility of it replacing lawyers cannot be completely ruled out.

But such AI technology superseding lawyers remains far-fetched for the foreseeable future. Here are some of the reasons:

- ChatGPT has limited memory: If lawyers use ChatGPT, their capacity to provide accurate legal advice is significantly affected, as this next-generation AI tech cannot retain context exceeding 1500 words.

- ChatGPT provides incorrect and outdated information: As ChatGPT lacks a strong temporal sense, it often provides inaccurate and nonsensical information that is not in accordance with current laws and regulations. It also lacks citations and references, which would help assure the trustworthiness of the output. Therefore, lawyers who rely on ChatGPT might face malpractice consequences, as they owe accountability and a high standard of care to clients.

- OpenAI raises data security concerns: Data security and data privacy are crucial limitations that arise from the licensing policies and operating practices of OpenAI. Commercially sensitive or client-privileged information shared with ChatGPT could be accessed by unauthorized parties, as OpenAI might retain it. Therefore, law firms must use ChatGPT with caution.

- ChatGPT lacks creative and strategic output: The quality of ChatGPT’s output depends on the training data fed to it by the programmer. However, commercial lawyers require human experience that can only be gained through years of practice. AI is still not capable of handling tasks in which strategic advice is given based on client-specific risks, or services involving complex deal structuring, legal advisory and transactional drafting.
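To make the limited-memory point concrete, here is a minimal Python sketch of how a long document could be split into pieces that each stay within a word limit before being given to a chatbot. The 1,500-word default simply reuses the figure stated above, and `split_into_chunks` is a hypothetical helper, not part of any OpenAI tool.

```python
def split_into_chunks(text: str, max_words: int = 1500) -> list[str]:
    """Split text into consecutive chunks of at most max_words words."""
    if max_words < 1:
        raise ValueError("max_words must be at least 1")
    words = text.split()
    # Step through the word list in strides of max_words.
    return [
        " ".join(words[start:start + max_words])
        for start in range(0, len(words), max_words)
    ]


if __name__ == "__main__":
    # Stand-in for a long legal document of 4,000 words.
    contract = "clause " * 4000
    for number, chunk in enumerate(split_into_chunks(contract), start=1):
        print(f"Chunk {number}: {len(chunk.split())} words")
```

Splitting by word count is a deliberate simplification. It keeps each piece within the limit, but the model still cannot connect a clause in one chunk with a definition in another, which is the practical risk for legal work.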

Last but not least: Risks associated with GPT3

ChatGPT is more effective than GPT3 for legal advice because it has been trained on massive data sets and uses pattern recognition to create similar samples as required. It has moved AI one step closer to the way the human brain works.

Secondly, ChatGPT can easily be trained in legal language. Therefore, it provides great assistance in legal discovery and legal translation, and can independently answer clients’ questions. Further, it can easily draft legal documents and contracts using the specific kinds of patterns it has learned.

Risks associated with GPT3

- Limited input size: GPT 3 cannot handle large amounts of input text. Inference is also slow, so it takes a long time to generate results.

- Pre-trained: It does not have the ability to learn from past interactions since it does not possess ongoing long-term memory.

- Biases: It suffers from machine learning bias. As the model relies on internet text, it is prone to reproducing the biases that humans exhibit in online data. The generated text can also include hate speech, fake news, and conspiracy theories.

- Unreliable interpreter: GPT 3 does not understand complex situations that require social/physical or any other reasoning while generating outcomes.

Conclusion

Even though the technology behind ChatGPT is useful and would reduce the user’s workload, it fails to provide the desired results in a judgment-delivering process. It can’t decide between right and wrong, nor does it have a human conscience. It can only generate text based on material gathered from many sources across the internet.

With the attached risks of ChatGPT infringing on the intellectual property rights of a user, publishing defamatory and controversial content, generating fake and misleading data and breaching the data privacy of a person on the internet, it would be safe to say that this technology should be used with caution.

Users should keep in mind the legal risks attached to it and use it ethically, lawfully and responsibly. They are advised to provide feedback on their use of ChatGPT and on what else can be done to improve the technology. Feedback about harmful outputs and about gaps in the technology’s safety and ethical procedures should help reduce ChatGPT’s technological and legal risks.

AI technology, such as ChatGPT, must be regulated and governed so that the legal and ethical concerns related to fairness and accountability are addressed. Policymakers, technologists and lawyers must strategize and develop appropriate legal frameworks so that AI can be used to generate the required output.

To conclude, a future in which law firms fully incorporate such AI models is still a distant dream, and it can only be achieved by leveraging ChatGPT within commercial law. Law firms need ample resources to train the model so that its content can be tailored to the audience and context. They also need to be fully transparent about their use of ChatGPT in carrying out specific tasks.